My Encounter With the Fantasy-Industrial Complex

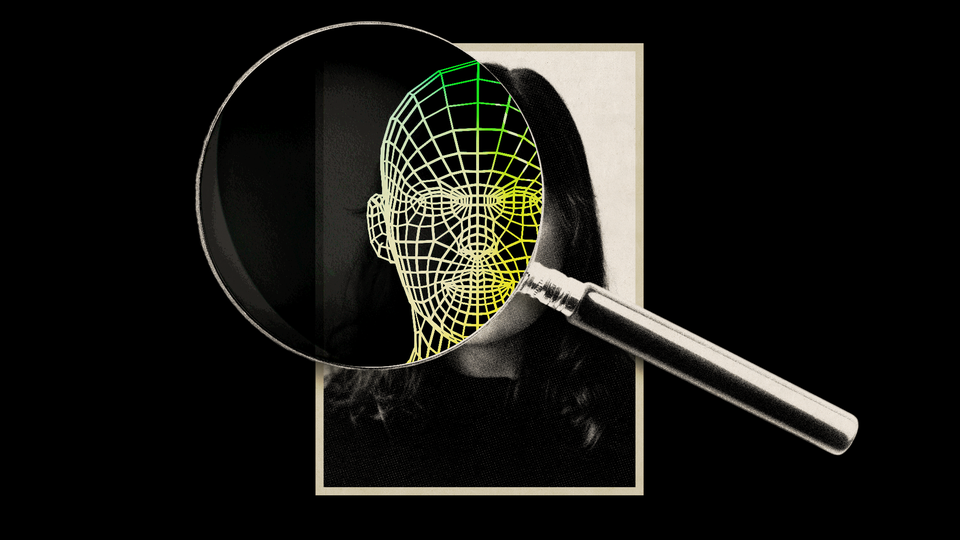

In an alternate universe, I run a sprawling cabal. Its goal, according to the contrarian newsletters, crank blogs, and breathless podcasts where the fantasy plays out, is to silence right-wing populists on behalf of the deep state.

Each morning, Google Alerts arrive in my inbox detailing the adventures of a fictional character bearing my name. Last month she starred in an article about “A Global Censorship Prison Built by the Women of the CIA.” In a Substack article headlined “Media Ruled by Robust PsyOp Alliance,” later posted on Infowars, an anti-vaccine propagandist implicated my alter ego in a plot to bring about a “One World Government.” A blog post titled “When Military Rule Supplants Democracy” quoted commentators who lumped her in with the “color revolution blob”—a reference to popular revolts against Russian-backed governments—and the perpetrators of “dirty tricks” overseas. You get the idea. Somewhat flatteringly, the commentators who make up these stories portray me as highly competent; one post on X credited the imaginary me with “brainwashing all of the local elections officials” to facilitate the theft of the 2020 election from Donald Trump.

The plotlines in this cinematic universe go back to the so-called Twitter Files, internal documents released to a group of writers after Elon Musk bought the social-media platform. Some of those writers have posited the existence of a staggering “Censorship Industrial Complex,” of which I am supposedly a leader. In written testimony for a March 2023 hearing of Representative Jim Jordan’s Select Subcommittee on the Weaponization of the Federal Government, the Substack writer Michael Shellenberger claimed that my cohorts and I “censored 22 million tweets” during the 2020 election. He also insinuated that I have CIA ties that I’ve kept “hidden from public view.” The crank theory that I am some kind of secret agent caught on. X users with follower counts in the tens of thousands or even hundreds of thousands started referring to me as “CIA Renee.” The mere mention of the character’s name, as with Thanos in the Marvel Cinematic Universe and Lex Luthor in the DC Extended Universe, became enough to establish villainy.

The actual facts of my life are less dramatic: When I was in college, I participated in a CIA scholarship program for computer-science majors. I worked at the agency’s headquarters during the summers, doing entry-level tasks, and left in 2004. Over the next decade and a half, I worked in finance and tech. At some point, I dropped my undergraduate internship from my résumé, just as I dropped having been on the ballroom-dancing team. But if my CIA past was supposed to be a secret, I kept it so poorly that, when Stanford University hired me in 2019, a colleague made a spy joke as he introduced me to a roomful of people at an event livestreamed on YouTube.

At the Stanford Internet Observatory (SIO), where I worked until recently, I studied the ways in which a variety of bad actors—spammers, scammers, hostile foreign governments, networks of terrible people targeting children, and, yes, hyper-partisans actively seeking to manipulate the public—use digital platforms to achieve their aims. From my brush with “CIA Renee” fantasists, I learned two things: First, being associated with public-interest research that has political implications can expose a person to vicious and in many cases bizarre attacks. Second, sweeping online conspiracy theories, far from dissipating upon contact with the real world, are beginning to reshape Americans’ political reality.

For Jordan’s subcommittee, tales about the censorship-industrial complex were a pretext to subpoena the emails that SIO employees, including me, exchanged with anyone at a tech company or in the executive branch of the United States government about social-media moderation or “the accuracy or truth of content.” Demands for documents were also issued to dozens of other academic institutions, think tanks, government agencies, and private companies. Meanwhile, conservative groups are suing my former colleagues and me. Stanford has run up huge legal bills. SIO’s future is unclear, and its effort to monitor election-related misinformation has been shelved.

In 2020, I helped lead the Election Integrity Partnership (EIP), a joint project by the Stanford Internet Observatory and other institutions that sought to detect viral misinformation about election procedures—such as exhortations to “text your vote”—as well as baseless claims about fraud intended to delegitimize the outcome. EIP worked with tech companies, civil-society groups, and state and local election-administration officials to assess evidence-free claims ricocheting around the internet, ranging from the mundane (ballots in a ditch) to the sensational (CIA supercomputers manipulating voting machines). The work was primarily done by student analysts. It was quite public: We posted frequently on our blog and on X to counter regular allegations of massive fraud.

But two years later, allegations about us began to spread across the right-wing-media ecosystem—including claims that our project had actually been masterminded by the Department of Homeland Security (run by Trump appointees at the time of the election) and executed in exchange for a National Science Foundation grant (that we applied for months after the election ended). As with most conspiracy theories, these claims contained grains of truth: Although the choice of whether to label a post as potential misinformation was never ours, we had, in fact, flagged some election-related tweets for social-media companies’ review. And a majority of the most viral false tweets were from conservatives—because a Republican president and his supporters were spreading lies to preemptively discredit an election that he then unquestionably lost. Subsequent events, including the January 6 riot, underscored why we were tracking election rumors to begin with: People riled up by demonstrably false claims can—and did—resort to violence.

To paint us as the bad guys, the people spinning tales about a censorship-industrial complex need their audience to accept an absurdly expansive definition of censorship. Strictly speaking, the term applies when the government prohibits or suppresses speech based on its content. Some people reasonably interpret censorship more broadly, to include when privately owned social-media platforms take down posts and deactivate accounts. But in Michael Shellenberger’s written testimony to Jim Jordan’s committee, merely labeling social-media posts as potentially misleading is portrayed as a form of censorship. Fact-checking, by his standard, is censorship. Down-ranking false theories—reducing their distribution in people’s social-media feeds while allowing them to remain on a site—is censorship. Flagging content for platforms’ review is censorship.

Beyond stretching terms, the writers of this drama also twisted facts beyond recognition. The claim that we censored 22 million tweets, for example, was based on a number cribbed from EIP’s own public assessment of our 2020 work. Our team had looked at some of the most highly viral delegitimization narratives of the election season—including “Sharpiegate” (which claimed that Trump voters were disenfranchised by felt-tip markers used to mark their ballots in Arizona) and the accusation that machines by Dominion Voting Systems had secretly changed votes. After the election, we counted up the total number of tweets that had been posted about the misleading claims we’d observed, and the sum was 22 million. During the campaign, our teams had tagged only 2,890 for review by Twitter. Of the social-media posts we highlighted, platforms took no action on 65 percent. Twenty-one percent received a warning label identifying them as potential misinformation. Just 13 percent were removed.

Those numbers are low—all the more so because some of the conspiracy narratives that EIP tracked were truly lunatic theories. After Fox News allowed commentators to lie about Dominion voting equipment on the air, the network ended up paying $787 million to settle a defamation lawsuit. But researchers flagging the story as it emerged—or a tech platform labeling posts about Dominion as “disputed”—is an egregious act of tyranny? Dominion and Sharpiegate were two of the most viral narratives of the 2020 election; Twitter users posted millions of times about them. If CIA Renee’s cabal had plotted to banish these subjects from the public discourse, it failed.

As the congressional scrutiny of EIP’s work intensified and the conspiracy-mongering worsened, I started hearing from climate scientists who told me they’d endured similar efforts to discredit their work. I read books such as Naomi Oreskes and Erik M. Conway’s Merchants of Doubt and Michael Mann’s The Hockey Stick and the Climate Wars, which describe a world of smear campaigns powered by cherry-picked emails and show hearings. The incentives of the internet today make situations like the climate scientists’, and mine, ever more likely. Internet platforms offer substantial financial rewards to would-be influencers who dream up wild stories about real people. Enthusiastic fans participate, adding their own embellishments to highly elastic narratives by seizing on any available scrap of information. Even the loosest possible connection between two people (a shared appearance on a podcast or panel, a “like” on a random tweet) can become proof of complicity.

I have tried to correct the record, with little success. Conspiracy theories are thrilling; reality is not. Refuting every ridiculous claim (22 million censored tweets!) takes a paragraph of painstaking explanation. Even though the censorship fantasists have yet to explain what it is that the CIA uses me for today, I’m expected to prove that I’m not a spy. After I wrote a blog post clarifying that my CIA affiliation was during my undergraduate days, Shellenberger pivoted to calling me “‘Former’ CIA Fellow Renee DiResta.” The scare quotes imply that I might not be telling the truth about no longer working at the agency. He went on to inform his audience ominously that “multiple people” have told him that once you enter the intelligence community, you never really leave.

Except that you do. The CIA stops paying you. You lose your security clearance. Only in the movies do spy bureaucracies have the prescience to groom undergraduates for future secret missions leveraging yet-to-be-invented technology. But the fantasy plot is built on innuendo, and innuendo is hard to stamp out. (Shellenberger has yet to respond to a request for comment.)

The cinematic universe that CIA Renee inhabits is entertaining for the audience and, I presume, profitable for the writers, who get to portray themselves to their online followers and paying subscribers as heroes in a quest to defeat shadowy enemies. But the harm to the people whom the characters are based on is real. Some rapt fans come to believe that they’ve been wronged. This perception is dangerous. The people who email me death threats sincerely believe that they are fighting back against a real cabal. This fiction is their fact.

I have three children. The older two overhear their father and me laughing about the adventures of CIA Renee and mocking the writers’ tortured prose, but my kids sense some force that they don’t fully understand and that feels malicious. My 10-year-old does Google searches to see what people are saying about his mother. Over dinner one night, he volunteered: “Some people on the internet really don’t like you.” This is true, I told him. And sometimes that is a badge of honor.

Another night we talked about Congress; he was studying how a bill becomes a law. “Passing laws is not what Congress does anymore,” I said wryly, and we laughed—because I’ve taught him things far outside an elementary-school curriculum that still describes governance in a shared reality. I told him that hearings have become political theater—performance art for legislators more interested in dunking on their enemies for internet points than in governing the country. What I haven’t discussed, and what I hope he doesn’t see, are the calls for retribution over fake sins that serve as plot points in the cinematic universe.

In the past few years, many other unsuspecting people have been turned into characters in conspiracy stories. Obsessive online mobs have come for tech employees who previously expressed political opinions. Poll workers and election officials who were recorded on video doing their jobs in 2020—only to be accused by henchmen for the president of the United States of manipulating an election—have had to flee their homes. This can happen to anyone.

Once established, characters never stop being useful. The story simply evolves around them. Indeed, as November’s election approaches, the plotlines have begun to morph once again; it’s time to start the next season of this show. The cost of becoming a character is borne by the target alone, but the cost of fantasy replacing reality affects us all.