Election Disinformation Is Getting More Chaotic

This is an edition of The Atlantic Daily, a newsletter that guides you through the biggest stories of the day, helps you discover new ideas, and recommends the best in culture. Sign up for it here.

Earlier this month, as hurricanes ravaged parts of the Southeast, Donald Trump, Elon Musk, and Marjorie Taylor Greene were among those amplifying dangerous disinformation about the storms and recovery efforts. The ensuing social-media chaos, as my colleague Elaine Godfrey has written, was just a preview of what we may see on and after Election Day. I spoke with Elaine, who covers politics, about what makes this moment so ripe for conspiracy theories, the ways online campaigns shape the real world, and how this all could still escalate soon.

Lora Kelley: In your recent story about the disinformation that spread after Hurricanes Helene and Milton, you warned that things would get even more chaotic around election time. What makes this moment so hospitable for disinformation?

Elaine Godfrey: A lot of the things going on now were not happening in the same way in 2020—and even then, we saw plenty of disinformation. One major development is that prominent Republican politicians have brought legal attacks on the institutions and government agencies that are trying to address disinformation. For example, the Stanford Internet Observatory, a think tank that studies the internet, has been effectively sued into oblivion for supposedly suppressing free speech. These lawsuits can have a chilling effect: Some research organizations aren’t doing as much as they could to combat disinformation; even labeling posts as disinformation becomes legally worrisome for their team.

Since 2020, we have also seen new organizations crop up—such as the Election Integrity Network—that promote conspiracy theories about and undermine confidence in American elections. It doesn’t help that big social-media companies like X and Meta have cut their content-moderation efforts, reducing the time and resources directed toward combating disinformation and false content on their platforms, whether it relates to elections or to hurricanes.

Then there are the recent world conflicts and crises involving Russia, Ukraine, Israel, Gaza, Iran, Lebanon, China. Though foreign actors have often tried to influence American elections in the past, they’ve ramped up their efforts, and recent wars and global tensions have given them new motivations for interfering in America’s political future. Take all of that and add generative AI, which has made major gains in the past two years, and it becomes a perfect storm for disinformation.

Lora: What types of disinformation and conspiracy theories have you seen proliferate in recent weeks—and how do you expect them to evolve as we get closer to the election and the weeks that follow?

Elaine: Usually, when conspiracy theories are successful, it’s because there is a grain of truth in them. But a lot of what I’m seeing lately does not even have that. Some of the posts surrounding the hurricane were just shockingly outlandish. Representative Marjorie Taylor Greene insinuated that Democrats had sent hurricanes toward Republican areas to influence the election cycle. A self-described “decentralized tech maverick” told Floridians that FEMA would never let them return to their homes if they evacuated.

Another trend is people with huge platforms claiming that they have received text messages from unnamed people who have detailed some explosive new information—but because these posts never name their sources, there’s no way to verify the allegations. A lot of that was going on with the hurricanes, and Elon Musk helped spread some of it. Around the election, we’re going to see a lot of posts like: A friend of a friend at a polling place in Georgia saw something crazy and sent me this text—and there’s no number, no name associated.

Election officials are particularly worried about doctored headlines and images concerning polling-place times and locations. We’ve seen some of that before, but I expect that will be a bigger deal this time. On and after Election Day, the conspiracies will be weirder, and they will spread farther.

Lora: Who is affected in the real world when disinformation spreads online?

Elaine: During Hurricanes Helene and Milton, FEMA officials talked about how its agents were at risk, because there were all these awful and false rumors about what they were doing; FEMA actually limited some in-person community outreach because it was worried about the safety of its officials. Another big concern is that people might have heard a rumor that FEMA won’t help Republicans—which isn’t true, of course—and because of that, they might avoid seeking the government aid they’re entitled to.

When it comes to election-conspiracy mongering, the practical effect is that we have a lot of people who think our democratic process is not safe and secure. To be clear: America’s elections are safe and secure. Election workers are also in a really tough position right now. It’s not always Democrats getting targeted—in fact, we have seen and will continue to see a lot of diligent, honest Republican election officials being unfairly pressured by their own neighbors who’ve been hoodwinked by Trump and his allies about election integrity.

If Trump loses, many of his supporters will think it’s because the election was fraudulent. They will believe this because he and his political allies have been feeding them this line for years. And as we saw on January 6, that can be dangerous—and deadly.

Lora: Elon Musk has become a vocal Trump supporter, and he has personally amplified disinformation on X, recently boosting false claims about Haitians eating pets and the Democrats wanting to take people’s kids. How has he affected the way information is spreading in this election cycle?

Elaine: Elon Musk has millions of followers, and has reengineered X so that his posts pop up first. He has also been repeating false information: Recently he spoke at a town hall about Dominion voting machines and said what a “coincidence” it was that Dominion voting machines are being used in Philadelphia and Maricopa County (which are both key population centers in swing states).

First of all, Dominion machines are not being used in Philadelphia; Philadelphia uses a different type of voting machine. And Dominion won $787 million settling a lawsuit against Fox News last year after the network engaged in this exact kind of talk. You would think that Musk would have learned by now that spreading fake news can be costly.

Lora: Is election disinformation only going to get worse from here?

Elaine: The good thing is that we’re better prepared this time. We know what happened in the previous presidential election; we understand the playbook. But tensions are really high right now, and there are so many ways for disinformation to spread—and spread far. It’s likely to get worse before it gets better, at least until companies reinvest in their disinformation teams, and our politicians, regardless of party, commit to calling out bad information.

Disinformation is meant to incite fear and muddy the waters. If you see something on social media that sparks an emotional reaction like fear or anger—whether it’s someone saying they’re being blocked from voting at their polling place or that a certain political party is transporting suitcases of ballots—check it out. Entertain the possibility that it’s not true. The likeliest explanation is probably the boring one.

Related:

- November will be worse.

- The new propaganda war

Here are four new stories from The Atlantic:

- Conspiracy theories in small-town Alabama

- Anne Applebaum: The case against pessimism

- What would a second Trump administration mean for the Middle East?

- The perverse consequences of tuition-free medical school

Today’s News

- Elon Musk pledged on Saturday to give $1 million each day until Election Day to registered swing-state voters who have signed Musk’s political action committee’s petition supporting the First and Second Amendments.

- Disney announced that Morgan Stanley’s CEO, James Gorman, will be the company’s new board chair in 2025, and that it will name a replacement for Bob Iger, its current CEO, in 2026.

- The Central Park Five members sued Donald Trump over the allegedly “false and defamatory” statements that he made about their case during the recent presidential debate.

Dispatches

- The Wonder Reader: Understanding the real difference between extroverts and introverts can help us better understand how personality is formed, Isabel Fattal writes.

Evening Read

A Moon-Size Hole in Cat Research

By Marina Koren

Like many pet owners, my partner and I have a long list of nonsensical nicknames for our 10-year-old tabby, Ace: sugarplum, booboo, Angela Merkel, sharp claw, clompers, night fury, poof ball. But we reserve one nickname for a very specific time each month, when Ace is more restless than usual in the daytime hours, skulking around from room to room instead of snoozing on a blanket. Or when his evening sprints become turbocharged, and he parkours off the walls and the furniture to achieve maximum speed. On those nights, the moon hangs bright in the dark sky, almost entirely illuminated. Then, we call him the waning gibbous.

Read the full article.

More From The Atlantic

- Tripping on nothing

- A dissident is built different.

- Why the oil market is not shocked

Culture Break

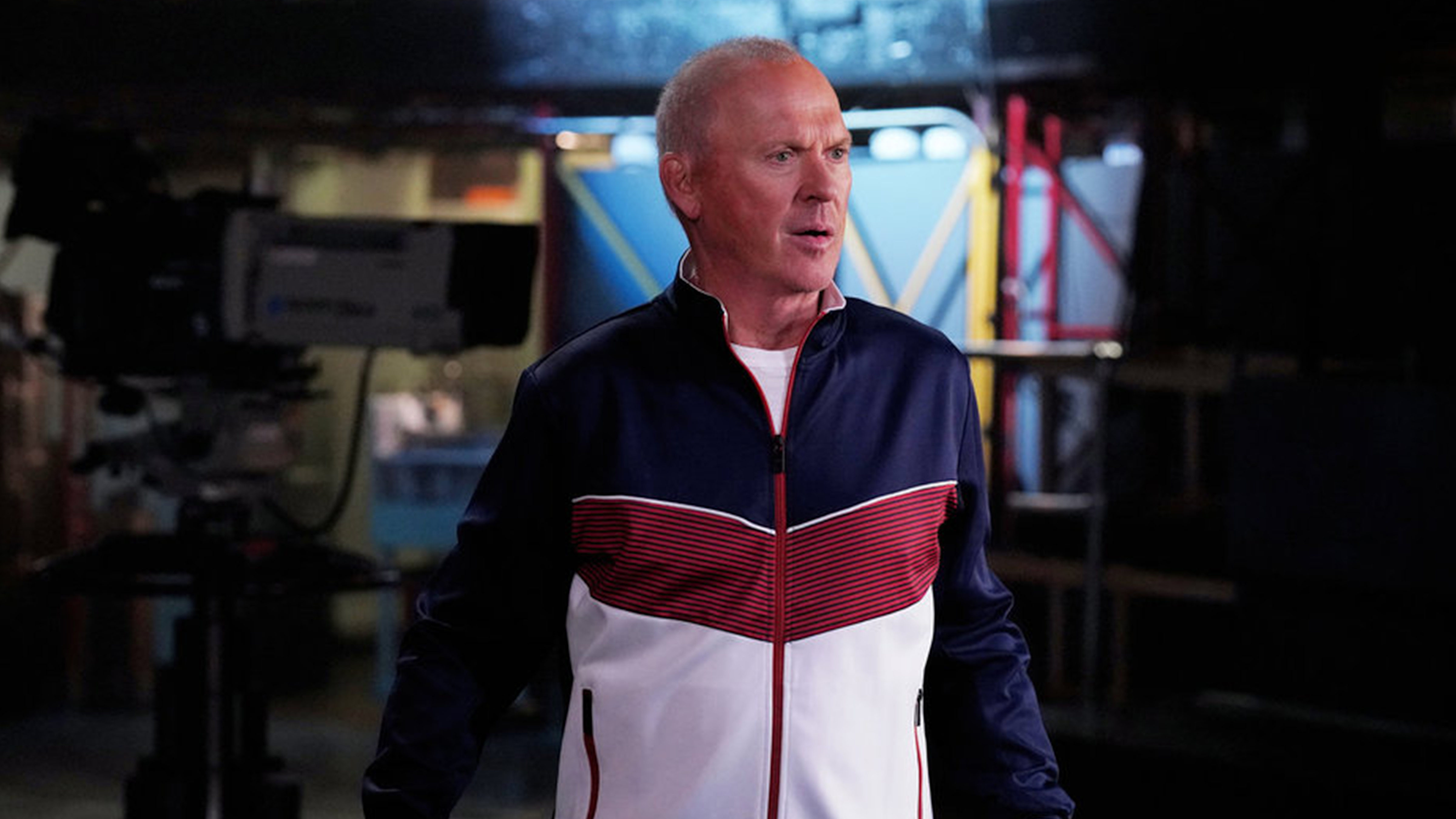

Watch. Michael Keaton’s recent performance on Saturday Night Live showed off his simple trick: He can go from “regular guy” to awkward eccentric in a heartbeat, Esther Zuckerman writes.

Debate. Is the backlash against Comic Sans, the world’s most hated font, finally ending?

Stephanie Bai contributed to this newsletter.

When you buy a book using a link in this newsletter, we receive a commission. Thank you for supporting The Atlantic.