The Fraudulent Science of Success


For anyone who teaches at a business school, the blog post was bad news. For Juliana Schroeder, it was catastrophic. She saw the allegations when they first went up, on a Saturday in early summer 2023. Schroeder teaches management and psychology at UC Berkeley’s Haas School of Business. One of her colleagues—a star professor at Harvard Business School named Francesca Gino—had just been accused of academic fraud. The authors of the blog post, a small team of business-school researchers, had found discrepancies in four of Gino’s published papers, and they suggested that the scandal was much larger. “We believe that many more Gino-authored papers contain fake data,” the blog post said. “Perhaps dozens.”

The story was soon picked up by the mainstream press. Reporters reveled in the irony that Gino, who had made her name as an expert on the psychology of breaking rules, may herself have broken them. (“Harvard Scholar Who Studies Honesty Is Accused of Fabricating Findings,” a New York Times headline read.) Harvard Business School had quietly placed Gino on administrative leave just before the blog post appeared. The school had conducted its own investigation; its nearly 1,300-page internal report, which was made public only in the course of related legal proceedings, concluded that Gino “committed research misconduct intentionally, knowingly, or recklessly” in the four papers. (Gino has steadfastly denied any wrongdoing.)

Schroeder’s interest in the scandal was more personal. Gino was one of her most consistent and important research partners. Their names appear together on seven peer-reviewed articles, as well as 26 conference talks. If Gino were indeed a serial cheat, then all of that shared work—and a large swath of Schroeder’s CV—was now at risk. When a senior academic is accused of fraud, the reputations of her honest, less established colleagues may get dragged down too. “Just think how horrible it is,” Katy Milkman, another of Gino’s research partners and a tenured professor at the University of Pennsylvania’s Wharton School, told me. “It could ruin your life.”

To head that off, Schroeder began her own audit of all the research papers that she’d ever done with Gino, seeking out raw data from each experiment and attempting to rerun the analyses. As that summer progressed, her efforts grew more ambitious. With the help of several colleagues, Schroeder pursued a plan to verify not just her own work with Gino, but a major portion of Gino’s scientific résumé. The group started reaching out to every other researcher who had put their name on one of Gino’s 138 co-authored studies. The Many Co-Authors Project, as the self-audit would be called, aimed to flag any additional work that might be tainted by allegations of misconduct and, more important, to absolve the rest—and Gino’s colleagues, by extension—of the wariness that now afflicted the entire field.

That field was not tucked away in some sleepy corner of academia, but was instead a highly influential one devoted to the science of success. Perhaps you’ve heard that procrastination makes you more creative, or that you’re better off having fewer choices, or that you can buy happiness by giving things away. All of that is research done by Schroeder’s peers—business-school professors who apply the methods of behavioral research to such subjects as marketing, management, and decision making. In viral TED Talks and airport best sellers, on morning shows and late-night television, these business-school psychologists hold tremendous sway. They also have a presence in this magazine and many others: Nearly every business academic who is named in this story has been either quoted or cited by The Atlantic on multiple occasions. A few, including Gino, have written articles for The Atlantic themselves.

Business-school psychologists are scholars, but they aren’t shooting for a Nobel Prize. Their research doesn’t typically aim to solve a social problem; it won’t be curing anyone’s disease. It doesn’t even seem to have much influence on business practices, and it certainly hasn’t shaped the nation’s commerce. Still, its flashy findings come with clear rewards: consulting gigs and speakers’ fees, not to mention lavish academic incomes. Starting salaries at business schools can be $240,000 a year—double what they are at campus psychology departments, academics told me.

The research scandal that has engulfed this field goes far beyond the replication crisis that has plagued psychology and other disciplines in recent years. Long-standing flaws in how scientific work is done—including insufficient sample sizes and the sloppy application of statistics—have left large segments of the research literature in doubt. Many avenues of study once deemed promising turned out to be dead ends. But it’s one thing to understand that scientists have been cutting corners. It’s quite another to suspect that they’ve been creating their results from scratch.

Schroeder has long been interested in trust. She’s given lectures on “building trust-based relationships”; she’s run experiments measuring trust in colleagues. Now she was working to rebuild the sense of trust within her field. A lot of scholars were involved in the Many Co-Authors Project, but Schroeder’s dedication was singular. In October 2023, a former graduate student who had helped tip off the team of bloggers to Gino’s possible fraud wrote her own “post mortem” on the case. It paints Schroeder as exceptional among her peers: a professor who “sent a clear signal to the scientific community that she is taking this scandal seriously.” Several others echoed this assessment, saying that ever since the news broke, Schroeder has been relentless—heroic, even—in her efforts to correct the record.

But if Schroeder planned to extinguish any doubts that remained, she may have aimed too high. More than a year since all of this began, the evidence of fraud has only multiplied. The rot in business schools runs much deeper than almost anyone had guessed, and the blame is unnervingly widespread. In the end, even Schroeder would become a suspect.

Gino was accused of faking numbers in four published papers. Just days into her digging, Schroeder uncovered another paper that appeared to be affected—and it was one that she herself had helped write.

The work, titled “Don’t Stop Believing: Rituals Improve Performance by Decreasing Anxiety,” was published in 2016, with Schroeder’s name listed second out of seven authors. Gino’s name was fourth. (The first few names on an academic paper are typically arranged in order of their contributions to the finished work.) The research it described was pretty standard for the field: a set of clever studies demonstrating the value of a life hack—one simple trick to nail your next presentation. The authors had tested the idea that simply following a routine—even one as arbitrary as drawing something on a piece of paper, sprinkling salt over it, and crumpling it up—could help calm a person’s nerves. “Although some may dismiss rituals as irrational,” the authors wrote, “those who enact rituals may well outperform the skeptics who forgo them.”

In truth, the skeptics have never had much purchase in business-school psychology. For the better part of a decade, this finding had been garnering citations—about 200, per Google Scholar. But when Schroeder looked more closely at the work, she realized it was questionable. In October 2023, she sketched out some of her concerns on the Many Co-Authors Project website.

The paper’s first two key experiments, marked in the text as Studies 1a and 1b, looked at how the salt-and-paper ritual might help students sing a karaoke version of Journey’s “Don’t Stop Believin’ ” in a lab setting. According to the paper, Study 1a found that people who did the ritual before they sang reported feeling much less anxious than people who did not; Study 1b confirmed that they had lower heart rates, as measured with a pulse oximeter, than students who did not.

As Schroeder noted in her October post, the original records of these studies could not be found. But Schroeder did have some data spreadsheets for Studies 1a and 1b—she’d posted them shortly after the paper had been published, along with versions of the studies’ research questionnaires—and she now wrote that “unexplained issues were identified” in both, and that there was “uncertainty regarding the data provenance” for the latter. Schroeder’s post did not elaborate, but anyone can look at the spreadsheets, and it doesn’t take a forensic expert to see that the numbers they report are seriously amiss.

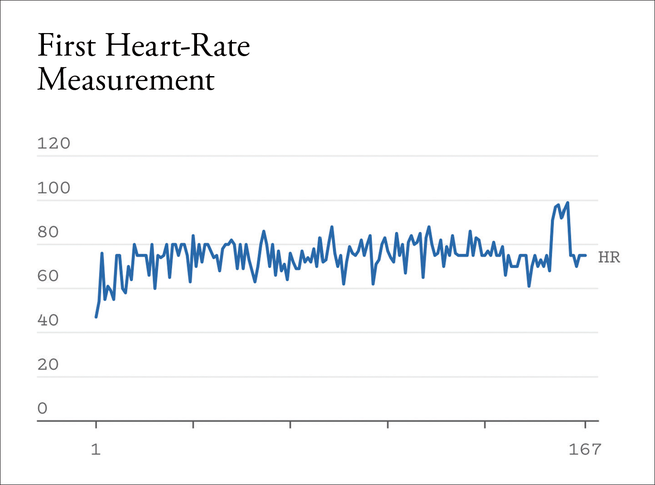

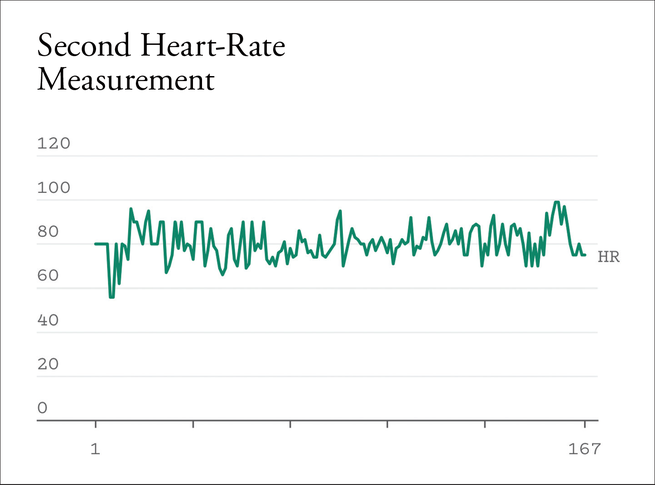

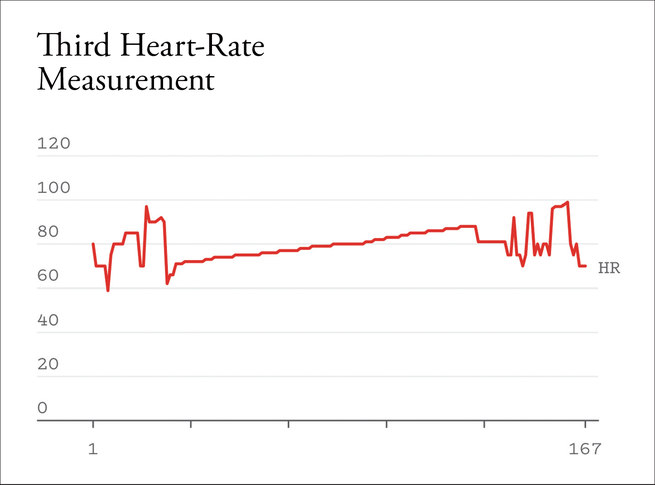

The “unexplained issues” with Studies 1a and 1b are legion. For one thing, the figures as reported don’t appear to match the research as described in other public documents. (For example, where the posted research questionnaire instructs the students to assess their level of anxiety on a five-point scale, the results seem to run from 2 to 8.) But the single most suspicious pattern shows up in the heart-rate data. According to the paper, each student had their pulse measured three times: once at the very start, again after they were told they’d have to sing the karaoke song, and then a third time, right before the song began. I created three graphs to illustrate the data’s peculiarities. They depict the measured heart rates for each of the 167 students who are said to have participated in the experiment, presented from left to right in their numbered order on the spreadsheet. The blue and green lines, which depict the first and second heart-rate measurements, show those values fluctuating more or less as one might expect for a noisy signal, measured from lots of individuals. But the red line doesn’t look like this at all: Rather, the measured heart rates form a series going up, across a run of more than 100 consecutive students.
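A pattern like this can be checked mechanically. What follows is a minimal Python sketch, not the analysis that anyone in this story actually ran: it measures the longest stretch of non-decreasing values in a series, then compares it with what turns up when the same values are shuffled. The function names are hypothetical, and the numbers are synthetic stand-ins generated for illustration, not the study's heart-rate data.

```python
import random


def longest_nondecreasing_run(values):
    """Length of the longest stretch where each value is >= the one before it."""
    if not values:
        return 0
    best = current = 1
    for prev, curr in zip(values, values[1:]):
        current = current + 1 if curr >= prev else 1
        best = max(best, current)
    return best


def shuffled_run_lengths(values, trials=2000, seed=0):
    """Longest run found in each of `trials` random reorderings of the same values."""
    rng = random.Random(seed)
    pool = list(values)
    results = []
    for _ in range(trials):
        rng.shuffle(pool)
        results.append(longest_nondecreasing_run(pool))
    return results


# SYNTHETIC illustration only: these are NOT the study's measurements.
rng = random.Random(1)
noisy = [rng.randint(60, 110) for _ in range(167)]  # stand-in for a noisy signal
# Same values, but with a block of 110 rows sorted to mimic an ordered stretch.
ordered = noisy[:40] + sorted(noisy[40:150]) + noisy[150:]

print("longest run, noisy series:   ", longest_nondecreasing_run(noisy))
print("longest run, ordered series: ", longest_nondecreasing_run(ordered))
print("longest run in any shuffle:  ", max(shuffled_run_lengths(noisy)))
```

On real data, a run far longer than anything seen among the shuffles would suggest that the row order carries structure that noise alone does not explain. It would not, by itself, say who or what produced that structure.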

I’ve reviewed the case with several researchers who suggested that this tidy run of values is indicative of fraud. “I see absolutely no reason” the sequence in No. 3 “should have the order that it does,” James Heathers, a scientific-integrity investigator and an occasional Atlantic contributor, told me. The exact meaning of the pattern is unclear; if you were fabricating data, you certainly wouldn’t strive for them to look like this. Nick Brown, a scientific-integrity researcher affiliated with Linnaeus University, in Sweden, guessed that the ordered values in the spreadsheet may have been cooked up after the fact. In that case, it might have been less important that they formed a natural-looking plot than that, when analyzed together, they matched fake statistics that had already been reported. “Someone sat down and burned quite a bit of midnight oil,” he proposed. I asked how sure he was that this pattern of results was the product of deliberate tampering; “100 percent, 100 percent,” he told me. “In my view, there is no innocent explanation in a universe where fairies don’t exist.”

Schroeder herself would come to a similar conclusion. Months later, I asked her whether the data were manipulated. “I think it’s very likely that they were,” she said. In the summer of 2023, when she reported the findings of her audit to her fellow authors, they all agreed that, whatever really happened, the work was compromised and ought to be retracted. But they could not reach consensus on who had been at fault. Gino did not appear to be responsible for either of the paper’s karaoke studies. Then who was?

This would not seem to be a tricky question. The published version of the paper has two lead authors who are listed as having “contributed equally” to the work. One of them was Schroeder. All of the co-authors agree that she handled two experiments—labeled in the text as Studies 3 and 4—in which participants solved a set of math problems. The other main contributor was Alison Wood Brooks, a young professor and colleague of Gino’s at Harvard Business School.

From the start, there was every reason to assume that Brooks had run the studies that produced the fishy data. Certainly they are similar to Brooks’s prior work. The same quirky experimental setup—in which students were asked to wear a pulse oximeter and sing a karaoke version of “Don’t Stop Believin’ ”—appears in her dissertation from the Wharton School in 2013, and she published a portion of that work in a sole-authored paper the following year. (Brooks herself is musically inclined, performing around Boston in a rock band.)

Yet despite all of this, Brooks told the Many Co-Authors Project that she simply wasn’t sure whether she’d had access to the raw data for Study 1b, the one with the “no innocent explanation” pattern of results. She also said she didn’t know whether Gino played a role in collecting them. On the latter point, Brooks’s former Ph.D. adviser, Maurice Schweitzer, expressed the same uncertainty to the Many Co-Authors Project.

Plenty of evidence now suggests that this mystery was manufactured. The posted materials for Study 1b, along with administrative records from the lab, indicate that the work was carried out at Wharton, where Brooks was in grad school at the time, studying under Schweitzer and running another, very similar experiment. Also, the metadata for the oldest public version of the data spreadsheet lists “Alison Wood Brooks” as the last person who saved the file.

Brooks, who has published research on the value of apologies, and whose first book—Talk: The Science of Conversation and the Art of Being Ourselves—is due out from Crown in January, did not respond to multiple requests for interviews or to a detailed list of written questions. Gino said that she “neither collected nor analyzed the data for Study 1a or Study 1b nor was I involved in the data audit.”

If Brooks did conduct this work and oversee its data, then Schroeder’s audit had produced a dire twist. The Many Co-Authors Project was meant to suss out Gino’s suspect work, and quarantine it from the rest. “The goal was to protect the innocent victims, and to find out what’s true about the science that had been done,” Milkman told me. But now, to all appearances, Schroeder had uncovered crooked data that apparently weren’t linked to Gino. That would mean Schroeder had another colleague who had contaminated her research. It would mean that her reputation—and the credibility of her entire field—was under threat from multiple directions at once.

Among the four research papers in which Gino was accused of cheating is one about the human tendency to misreport facts and figures for personal gain. Which is to say: She was accused of faking data for a study of when and how people might fake data. Amazingly, a different set of data from the same paper had already been flagged as the product of potential fraud, two years before the Gino scandal came to light. The first was contributed by Dan Ariely of Duke University—a frequent co-author of Gino’s and, like her, a celebrated expert on the psychology of telling lies. (Ariely has said that a Duke investigation—which the school has not acknowledged—discovered no evidence that he “falsified data or knowingly used falsified data.” He has also said that the investigation “determined that I should have done more to prevent faulty data from being published in the 2012 paper.”)

The existence of two apparently corrupted data sets was shocking: a keystone paper on the science of deception wasn’t just invalid, but possibly a scam twice over. But even in the face of this ignominy, few in business academia were ready to acknowledge, in the summer of 2023, that the problem might be larger still—and that their research literature might well be overrun with fantastical results.

Some scholars had tried to raise alarms before. In 2019, Dennis Tourish, a professor at the University of Sussex Business School, published a book titled Management Studies in Crisis: Fraud, Deception and Meaningless Research. He cites a study finding that more than a third of surveyed editors at management journals say they’ve encountered fabricated or falsified data. Even that alarming rate may undersell the problem, Tourish told me, given all of the misbehavior in his discipline that gets overlooked or covered up.

Anonymous surveys of various fields find that roughly 2 percent of scholars will admit to having fabricated, falsified, or modified data at least once in their career. But business-school psychology may be especially prone to misbehavior. For one thing, the field’s research standards are weaker than those for other psychologists. In response to the replication crisis, campus psychology departments have lately taken up a raft of methodological reforms. Statistically suspect practices that were de rigueur a dozen years ago are now uncommon; sample sizes have gotten bigger; a study’s planned analyses are now commonly written down before the work is carried out. But this great awakening has been slower to develop in business-school psychology, several academics told me. “No one wants to kill the golden goose,” one early-career researcher in business academia said. If management and marketing professors embraced all of psychology’s reforms, he said, then many of their most memorable, most TED Talk–able findings would go away. “To use marketing lingo, we’d lose our unique value proposition.”

It’s easy to imagine how cheating might lead to more cheating. If business-school psychology is beset with suspect research, then the bar for getting published in its flagship journals ratchets up: A study must be even flashier than all the other flashy findings if its authors want to stand out. Such incentives move in only one direction: Eventually, the standard tools for torturing your data will no longer be enough. Now you have to go a little further; now you have to cut your data up, and carve them into sham results. Having one or two prolific frauds around would push the bar for publishing still higher, inviting yet more corruption. (And because the work is not exactly brain surgery, no one dies as a result.) In this way, a single discipline might come to look like Major League Baseball did 20 years ago: defined by juiced-up stats.

In the face of its own cheating scandal, MLB started screening every single player for anabolic steroids. There is no equivalent in science, and certainly not in business academia. Uri Simonsohn, a professor at the Esade Business School in Barcelona, is a member of the blogging team, called Data Colada, that caught the problems in both Gino’s and Ariely’s work. (He was also a motivating force behind the Many Co-Authors Project.) Data Colada has called out other instances of sketchy work and apparent fakery within the field, but its efforts at detection are highly targeted. They’re also quite unusual. Crying foul on someone else’s bad research makes you out to be a troublemaker, or a member of the notional “data police.” It can also bring a claim of defamation. Gino filed a $25 million defamation lawsuit against Harvard and the Data Colada team not long after the bloggers attacked her work. (This past September, a judge dismissed the portion of her claims that involved the bloggers and the defamation claim against Harvard. She still has pending claims against the university for gender discrimination and breach of contract.) The risks are even greater for those who don’t have tenure. A junior academic who accuses someone else of fraud may antagonize the senior colleagues who serve on the boards and committees that make publishing decisions and determine funding and job appointments.

These risks for would-be critics reinforce an atmosphere of complacency. “It’s embarrassing how few protections we have against fraud and how easy it has been to fool us,” Simonsohn said in a 2023 webinar. He added, “We have done nothing to prevent it. Nothing.”

Like so many other scientific scandals, the one Schroeder had identified quickly sank into a swamp of closed-door reviews and taciturn committees. Schroeder says that Harvard Business School declined to investigate her evidence of data-tampering, citing a policy of not responding to allegations made more than six years after the misconduct is said to have occurred. (Harvard Business School’s head of communications, Mark Cautela, declined to comment.) Her efforts to address the issue through the University of Pennsylvania’s Office of Research Integrity likewise seemed fruitless. (A spokesperson for the Wharton School would not comment on “the existence or status of” any investigations.)

Retractions have a way of dragging out in science publishing. This one was no exception. Maryam Kouchaki, an expert on workplace ethics at Northwestern University’s Kellogg School of Management and co–editor in chief of the journal that published the “Don’t Stop Believing” paper, had first received the authors’ call to pull their work in August 2023. As the anniversary of that request drew near, Schroeder still had no idea how the suspect data would be handled, and whether Brooks—or anyone else—would be held responsible.

Finally, on October 1, the “Don’t Stop Believing” paper was removed from the scientific literature. The journal’s published notice laid out some basic conclusions from Schroeder’s audit: Studies 1a and 1b had indeed been run by Brooks, the raw data were not available, and the posted data for 1b showed “streaks of heart rate ratings that were unlikely to have occurred naturally.” Schroeder’s own contributions to the paper were also found to have some flaws: Data points had been dropped from her analysis without any explanation in the published text. (Although this practice wasn’t fully out-of-bounds given research standards at the time, the same behavior would today be understood as a form of “p-hacking”—a pernicious source of false-positive results.) But the notice did not say whether the fishy numbers from Study 1b had been fabricated, let alone by whom. Someone other than Brooks may have handled those data before publication, it suggested. “The journal could not investigate this study any further.”

Two days later, Schroeder posted to X a link to her full and final audit of the paper. “It took *hundreds* of hours of work to complete this retraction,” she wrote, in a thread that described the flaws in her own experiments and Studies 1a and 1b. “I am ashamed of helping publish this paper & how long it took to identify its issues,” the thread concluded. “I am not the same scientist I was 10 years ago. I hold myself accountable for correcting any inaccurate prior research findings and for updating my research practices to do better.” Her peers responded by lavishing her with public praise. One colleague called the self-audit “exemplary” and an “act of courage.” A prominent professor at Columbia Business School congratulated Schroeder for being “a cultural heroine, a role model for the rising generation.”

But amid this celebration of her unusual transparency, an important and related story had somehow gone unnoticed. In the course of scouting out the edges of the cheating scandal in her field, Schroeder had uncovered yet another case of seeming science fraud. And this time, she’d blown the whistle on herself.

That stunning revelation, unaccompanied by any posts on social media, had arrived in a muffled update to the Many Co-Authors Project website. Schroeder announced that she’d found “an issue” with one more paper that she’d produced with Gino. This one, “Enacting Rituals to Improve Self-Control,” came out in 2018 in the Journal of Personality and Social Psychology; its author list overlaps substantially with that of the earlier “Don’t Stop Believing” paper (though Brooks was not involved). Like the first, it describes a set of studies that purport to show the power of the ritual effect. Like the first, it includes at least one study for which data appear to have been altered. And like the first, its data anomalies have no apparent link to Gino.

The basic facts are laid out in a document that Schroeder put into an online repository, describing an internal audit that she conducted with the help of the lead author, Allen Ding Tian. (Tian did not respond to requests for comment.) The paper opens with a field experiment on women who were trying to lose weight. Schroeder, then in grad school at the University of Chicago, oversaw the work; participants were recruited at a campus gym.

Half of the women were instructed to perform a ritual before each meal for the next five days: They were to put their food into a pattern on their plate. The other half were not. Then Schroeder used a diet-tracking app to tally all the food that each woman reported eating, and found that the ones in the ritual group took in about 200 fewer calories a day, on average, than the others. But in 2023, when she started digging back into this research, she uncovered some discrepancies. According to her study’s raw materials, nine of the women who reported that they’d done the food-arranging ritual were listed on the data spreadsheet as being in the control group; six others were mislabeled in the opposite direction. When Schroeder fixed these errors for her audit, the ritual effect completely vanished. Now it looked as though the women who’d done the food-arranging had consumed a few more calories, on average, than the women who had not.

Mistakes happen in research; sometimes data get mixed up. These errors, though, appear to be intentional. The women whose data had been swapped fit a suspicious pattern: The ones whose numbers might have undermined the paper’s hypothesis were disproportionately affected. This is not a subtle thing; among the 43 women who reported that they’d done the ritual, the six most prolific eaters all got switched into the control group. Nick Brown and James Heathers, the scientific-integrity researchers, have each tried to figure out the odds that anything like the study’s published result could have been attained if the data had been switched at random. Brown’s analysis pegged the answer at one in 1 million. “Data manipulation makes sense as an explanation,” he told me. “No other explanation is immediately obvious to me.” Heathers said he felt “quite comfortable” in concluding that whatever went wrong with the experiment “was a directed process, not a random process.”
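The logic of that kind of estimate can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not Brown’s or Heathers’s actual method: it mislabels randomly chosen participants many times over and counts how often a gap as large as a chosen target appears, then contrasts that with moving only the biggest eaters. The function names are hypothetical. The calorie figures are synthetic, and the control-group size of 40 is an assumption; only the counts of 43, nine, and six and the roughly 200-calorie gap come from the account above.

```python
import random
from statistics import mean


def effect_after_random_swaps(ritual, control, n_to_control, n_to_ritual, rng):
    """mean(control) - mean(ritual) after mislabeling randomly chosen participants."""
    r_idx = set(rng.sample(range(len(ritual)), n_to_control))
    c_idx = set(rng.sample(range(len(control)), n_to_ritual))
    new_ritual = [x for i, x in enumerate(ritual) if i not in r_idx]
    new_ritual += [control[i] for i in c_idx]
    new_control = [x for i, x in enumerate(control) if i not in c_idx]
    new_control += [ritual[i] for i in r_idx]
    return mean(new_control) - mean(new_ritual)


def share_of_random_swaps_reaching(ritual, control, target,
                                   n_to_control, n_to_ritual,
                                   trials=5000, seed=0):
    """Fraction of random relabelings whose gap is at least `target`."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        gap = effect_after_random_swaps(ritual, control,
                                        n_to_control, n_to_ritual, rng)
        if gap >= target:
            hits += 1
    return hits / trials


def effect_after_moving_top_eaters(ritual, control, k):
    """Gap when only the k highest values in `ritual` are relabeled as control."""
    ordered = sorted(ritual)
    cut = len(ordered) - k
    kept, moved = ordered[:cut], ordered[cut:]
    return mean(control + moved) - mean(kept)


# SYNTHETIC daily-calorie values with no true group difference.
# These are NOT the study's data; the control size of 40 is an assumption.
rng = random.Random(7)
ritual = [rng.gauss(1500, 300) for _ in range(43)]
control = [rng.gauss(1500, 300) for _ in range(40)]

print("share of random swaps reaching a 200-calorie gap:",
      share_of_random_swaps_reaching(ritual, control, 200, 9, 6))
print("gap after moving only the six biggest eaters:",
      round(effect_after_moving_top_eaters(ritual, control, 6)))
```

The contrast is the point of the sketch: when the relabeling is random, a large gap in the hypothesized direction should be rare, whereas relabeling the heaviest eaters pushes the gap that way by construction.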

Whether or not the data alterations were intentional, their specific form—flipped conditions for a handful of participants, in a way that favored the hypothesis—matches up with data issues raised by Harvard Business School’s investigation into Gino’s work. Schroeder rejected that comparison when I brought it up, but she was willing to accept some blame. “I couldn’t feel worse about that paper and that study,” she told me. “I’m deeply ashamed of it.”

Still, she said that the source of the error wasn’t her. Her research assistants on the project may have caused the problem; Schroeder wonders if they got confused. She said that two RAs, both undergraduates, had recruited the women at the gym, and that the scene there was chaotic: Sometimes multiple people came up to them at once, and the undergrads may have had to make some changes on the fly, adjusting which participants were being put into which group for the study. Maybe things went wrong from there, Schroeder said. One or both RAs might have gotten ruffled as they tried to paper over inconsistencies in their record-keeping. They both knew what the experiment was meant to show, and how the data ought to look—so it’s possible that they peeked a little at the data and reassigned the numbers in the way that seemed correct. (Schroeder’s audit lays out other possibilities, but describes this one as the most likely.)

Schroeder’s account is certainly plausible, but it’s not a perfect fit with all of the facts. For one thing, the posted data indicate that during most days on which the study ran, the RAs had to deal with only a handful of participants—sometimes just two. How could they have gotten so bewildered?

Any further details seem unlikely to emerge. The paper was formally retracted in the February issue of the journal. Schroeder has chosen not to name the RAs who helped her with the study, and she told me that she hasn’t tried to contact them. “I just didn’t think it was appropriate,” she said. “It doesn’t seem like it would help matters at all.” By her account, neither one is currently in academia, and she did not discover any additional issues when she reviewed their other work. (I reached out to more than a dozen former RAs and lab managers who were thanked in Schroeder’s published papers from around this time. Five responded to my queries; all of them denied having helped with this experiment.) In the end, Schroeder said, she took the data at the assistants’ word. “I did not go in and change labels,” she told me. But she also said repeatedly that she doesn’t think her RAs should take the blame. “The responsibility rests with me, right? And so it was appropriate that I’m the one named in the retraction notice,” she said. Later in our conversation, she summed up her response: “I’ve tried to trace back as best I can what happened, and just be honest.”

Across the many months I spent reporting this story, I’d come to think of Schroeder as a paragon of scientific rigor. She has led a seminar on “Experimental Design and Research Methods” in a business program with a sterling reputation for its research standards. She’d helped set up the Many Co-Authors Project, and then pursued it as aggressively as anyone. (Simonsohn even told me that Schroeder’s look-at-everything approach was a little “overboard.”) I also knew that she was devoted to the dreary but important task of reproducing other people’s published work.

As for the dieting research, Schroeder had owned the awkward optics. “It looks weird,” she told me when we spoke in June. “It’s a weird error, and it looks consistent with changing things in the direction to get a result.” But weirder still was how that error came to light, through a detailed data audit that she’d undertaken of her own accord. Apparently, she’d gone to great effort to call attention to a damning set of facts. That alone could be taken as a sign of her commitment to transparency.

But in the months that followed, I couldn’t shake the feeling that another theory also fit the facts. Schroeder’s leading explanation for the issues in her work—An RA must have bungled the data—sounded distressingly familiar. Francesca Gino had offered up the same defense to Harvard’s investigators. The mere repetition of this story doesn’t mean that it’s invalid: Lab techs and assistants really do mishandle data on occasion, and they may of course engage in science fraud. But still.

As for Schroeder’s all-out focus on integrity, and her public efforts to police the scientific record, I came to understand that most of these had been adopted, all at once, in mid-2023, shortly after the Gino scandal broke. (The version of Schroeder’s résumé that was available on her webpage in the spring of 2023 does not describe any replication projects whatsoever.) That makes sense if the accusations changed the way she thought about her field—and she did describe them to me as “a wake-up call.” But here’s another explanation: Maybe Schroeder saw the Gino scandal as a warning that the data sleuths were on the march. Perhaps she figured that her own work might end up being scrutinized, and then, having gamed this out, she decided to be a data sleuth herself. She’d publicly commit to reexamining her colleagues’ work, doing audits of her own, and asking for corrections. This would be her play for amnesty during a crisis.

I spoke with Schroeder for the last time on the day before Halloween. She was notably composed when I confronted her with the possibility that she’d engaged in data-tampering herself. She repeated what she’d told me months before, that she definitely did not go in and change the numbers in her study. And she rejected the idea that her self-audits had been strategic, that she’d used them to divert attention from her own wrongdoing. “Honestly, it’s disturbing to hear you even lay it out,” she said. “Because I think if you were to look at my body of work and try to replicate it, I think my hit rate would be good.” She continued: “So to imply that I’ve actually been, I don’t know, doing a lot of fraudulent stuff myself for a long time, and this was a moment to come clean with it? I just don’t think the evidence bears that out.”

That wasn’t really what I’d meant to imply. The story I had in mind was more mundane—and in a sense more tragic. I went through it: Perhaps she’d fudged the results for a study just once or twice early in her career, and never again. Perhaps she’d been committed, ever since, to proper scientific methods. And perhaps she really did intend to fix some problems in her field.

Schroeder allowed that she’d been susceptible to certain research practices—excluding data, for example—that are now considered improper. So were many of her colleagues. In that sense, she’d been guilty of letting her judgment be distorted by the pressure to succeed. But I understood what she was saying: This was not the same as fraud.

Throughout our conversations, Schroeder had avoided stating outright that anyone in particular had committed fraud. But not all of her colleagues had been so cautious. Just a few days earlier, I’d received an unexpected message from Maurice Schweitzer, the senior Wharton business-school professor who oversaw Alison Wood Brooks’s “Don’t Stop Believing” research. Up to this point, he had not responded to my request for an interview, and I figured he’d chosen not to comment for this story. But he finally responded to a list of written questions. It was important for me to know, his email said, that Schroeder had “been involved in data tampering.” He included a link to the retraction notice for her paper on rituals and eating. When I asked Schweitzer to elaborate, he did not respond. (Schweitzer’s most recent academic work is focused on the damaging effects of gossip; one of his papers from 2024 is titled “The Interpersonal Costs of Revealing Others’ Secrets.”)

I laid this out for Schroeder on the phone. “Wow,” she said. “That’s unfortunate that he would say that.” She went silent for a long time. “Yeah, I’m sad he’s saying that.”

Another long silence followed. “I think that the narrative that you laid out, Dan, is going to have to be a possibility,” she said. “I don’t think there’s a way I can refute it, but I know what the truth is, and I think I did the right thing, with trying to clean the literature as much as I could.”

This is all too often where these stories end: A researcher will say that whatever really happened must forever be obscure. Dan Ariely told Business Insider in February 2024: “I’ve spent a big part of the last two years trying to find out what happened. I haven’t been able to … I decided I have to move on with my life.” Schweitzer told me that the most relevant files for the “Don’t Stop Believing” paper are “long gone,” and that the chain of custody for its data simply can’t be tracked. (The Wharton School agreed, telling me that it “does not possess the requested data” for Study 1b, “as it falls outside its current data retention period.”) And now Schroeder had landed on a similar position.

It’s uncomfortable for a scientist to claim that the truth might be unknowable, just as it would be for a journalist, or any other truth-seeker by vocation. I daresay the facts regarding all of these cases may yet be amenable to further inquiry. The raw data from Study 1b may still exist, somewhere; if so, one might compare them with the posted spreadsheet to confirm that certain numbers had been altered. And Schroeder says she has the names of the RAs who worked on her dieting experiment; in theory, she could ask those people for their recollections of what happened. If figures aren’t checked, or questions aren’t asked, it’s by choice.

What feels out of reach is not so much the truth of any set of allegations, but their consequences. Gino has been placed on administrative leave, but in many other instances of suspected fraud, nothing happens. Both Brooks and Schroeder appear to be untouched. “The problem is that journal editors and institutions can be more concerned with their own prestige and reputation than finding out the truth,” Dennis Tourish, at the University of Sussex Business School, told me. “It can be easier to hope that this all just goes away and blows over and that somebody else will deal with it.”

Some degree of disillusionment was common among the academics I spoke with for this story. The early-career researcher in business academia told me that he has an “unhealthy hobby” of finding manipulated data. But now, he said, he’s giving up the fight. “At least for the time being, I’m done,” he told me. “Feeling like Sisyphus isn’t the most fulfilling experience.” A management professor who has followed all of these cases very closely gave this assessment: “I would say that distrust characterizes many people in the field—it’s all very depressing and demotivating.”

It’s possible that no one is more depressed and demotivated, at this point, than Juliana Schroeder. “To be honest with you, I’ve had some very low moments where I’m like, ‘Well, maybe this is not the right field for me, and I shouldn’t be in it,’ ” she said. “And to even have any errors in any of my papers is incredibly embarrassing, let alone one that looks like data-tampering.”

I asked her if there was anything more she wanted to say.

“I guess I just want to advocate for empathy and transparency—maybe even in that order. Scientists are imperfect people, and we need to do better, and we can do better.” Even the Many Co-Authors Project, she said, has been a huge missed opportunity. “It was sort of like a moment where everyone could have done self-reflection. Everyone could have looked at their papers and done the exercise I did. And people didn’t.”

Maybe the situation in her field would eventually improve, she said. “The optimistic point is, in the long arc of things, we’ll self-correct, even if we have no incentive to retract or take responsibility.”

“Do you believe that?” I asked.

“On my optimistic days, I believe it.”

“Is today an optimistic day?”

“Not really.”

This article appears in the January 2025 print edition with the headline “The Fraudulent Science of Success.”